OPTICAL COMPUTING

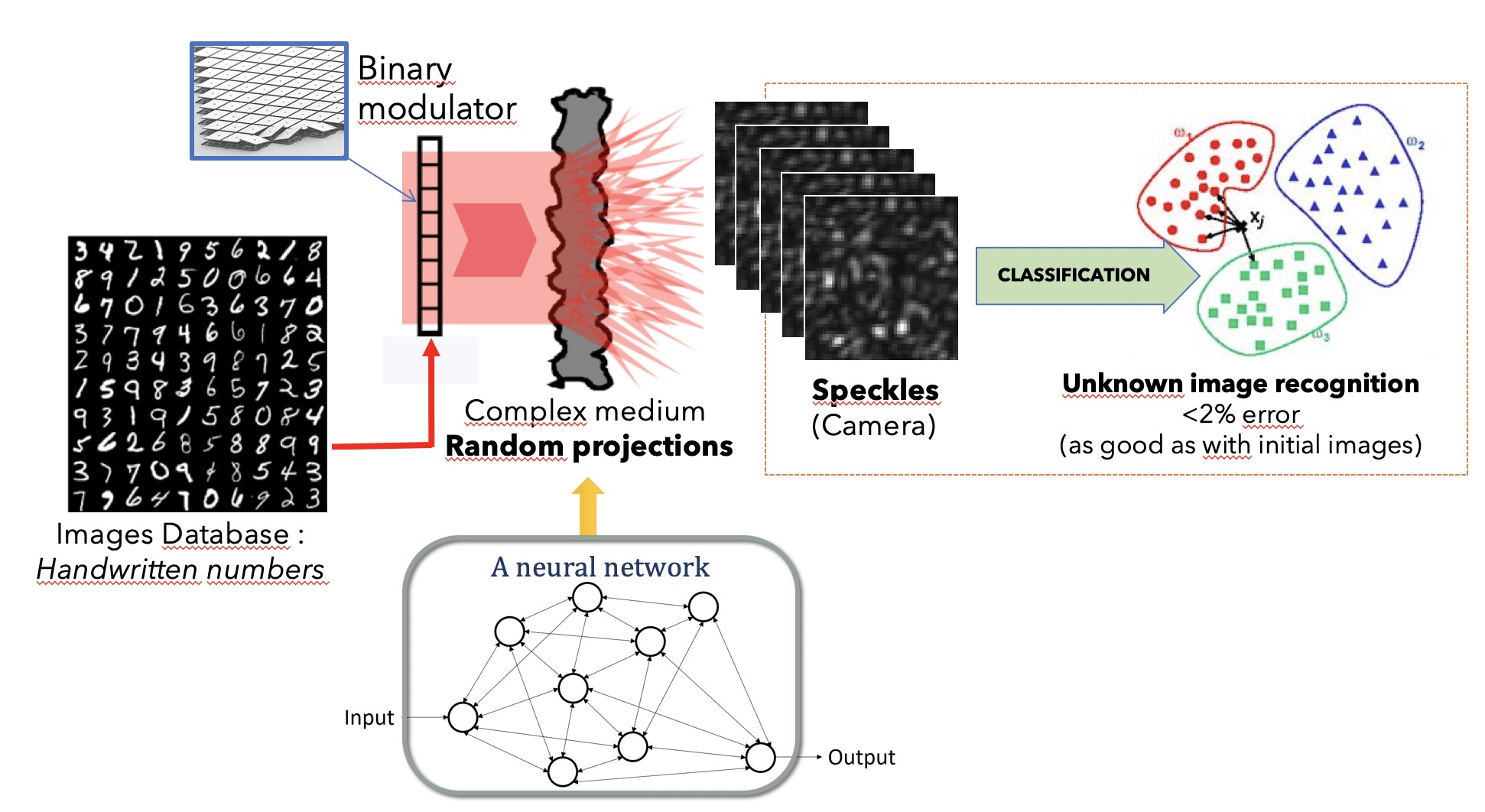

IMAGE CLASSIFICATION THROUGH A SCATTERING MEDIUM

Random projections have proven extremely useful in many signal processing and machine learning applications. However, they often require either storing a very large random matrix or using a different, structured matrix to reduce the computational and memory costs. Here, we overcome this difficulty by proposing an analog optical device that performs the random projections literally at the speed of light, without having to store any matrix in memory. This is achieved using the physical properties of multiple scattering of coherent light in random media. We used this device on a simple classification task with a kernel machine and showed that, on the MNIST database, the experimental results closely match the theoretical performance of the corresponding kernel. This framework can help make kernel methods practical for applications that have large training sets and/or require real-time prediction.
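The idea of random features approximating a kernel can be illustrated with a minimal numerical sketch. It assumes a simplified model that is not taken from the publication below: the scattering medium acts as an i.i.d. complex Gaussian matrix `W`, and the camera records the intensity `|Wx|^2`. The function names are hypothetical. Under these assumptions, the average product of features for two real inputs converges to the closed-form kernel `||x||^2 ||y||^2 + <x, y>^2`.

```python
import numpy as np

def optical_random_features(X, n_features, rng):
    """Simulated intensity features |XW|^2 for the rows of X (toy model)."""
    d = X.shape[1]
    # Assumption: i.i.d. complex Gaussian entries with unit variance.
    W = (rng.standard_normal((d, n_features))
         + 1j * rng.standard_normal((d, n_features))) / np.sqrt(2)
    return np.abs(X @ W) ** 2

def expected_kernel(x, y):
    """Limit of the feature inner product for the model assumed above."""
    return (x @ x) * (y @ y) + (x @ y) ** 2

rng = np.random.default_rng(0)
x = np.array([1.0, 0.5, -0.2])
y = np.array([0.3, -1.0, 0.8])
n_features = 20000

features = optical_random_features(np.stack([x, y]), n_features, rng)
empirical = features[0] @ features[1] / n_features
print(empirical, expected_kernel(x, y))
```

A linear classifier trained on such features (for instance, ridge regression) then behaves approximately like a kernel machine with the corresponding kernel, without the kernel matrix ever being formed.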

This work was the seed for the founding of the spin-off LightOn (see below).

Publication(s)

- A. Saade, F. Caltagirone, I. Carron, L. Daudet, A. Drémeau, S. Gigan, F. Krzakala, Random projections through multiple optical scattering: approximating kernels at the speed of light, IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai (2016) link

Publication(s)

- J. Dong, M. Rafayelyan, F. Krzakala, S. Gigan, Optical Reservoir Computing using multiple light scattering for chaotic systems prediction, IEEE Journal of Selected Topics in Quantum Electronics, 10.1109/JSTQE.2019.2936281 (2019) link

- J. Dong, S. Gigan, F. Krzakala, G. Wainrib, Scaling up Echo-State Networks with multiple light scattering, IEEE Statistical Signal Processing Workshop (SSP), 448 (2018) link

Reservoir Computing

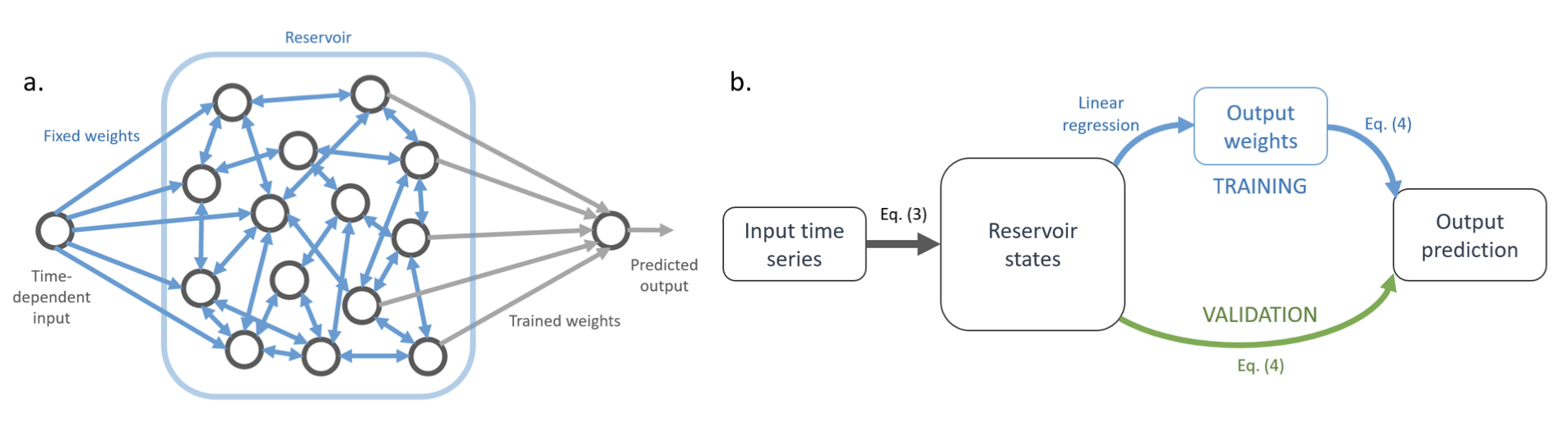

Machine learning is a powerful tool to learn patterns and make inferences in complex problems based on a large number of examples. It relies on models with tunable parameters, for example Neural Networks, in which the interconnection weights between neurons are tuned to perform a particular task. In supervised learning, these weights are trained using a large number of examples, which consist of pairs of input data and desired outputs. Thanks to increasing computational power and the large amount of data available, neural networks have achieved state-of-the-art performance on very diverse tasks, such as image recognition [29], Natural Language Processing, or recommender systems [30], to name a few. Today machine learning is a blooming field, and there are a number of other approaches beyond neural networks, such as kernel methods and decision trees. Computational efficiency is a major research direction, as there is a strong need to scale down heavy machine learning computations so that they can run on smaller devices.

Recurrent Neural Networks are notoriously hard to train [31]. Recurrent connections are a challenge for error backpropagation, which is the method of choice to train neural networks with feed-forward connections such as Convolutional Neural Networks. As a possible way to bypass this training issue, Echo-State Networks (ESNs) are Recurrent Neural Networks whose internal weights are drawn at random and then kept fixed. Only the output weights are trained for a particular task, which reduces the training to a simple linear regression. The number of tunable parameters is thus smaller, but this does not necessarily mean that this Neural Network model is less expressive than fully tunable Recurrent Neural Networks. It is very easy to increase the number of neurons, and it has been proven that large networks can universally approximate any continuous function.
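The training scheme described above (fixed random internal weights, output weights obtained by linear regression) can be sketched in a few lines of NumPy. This is an illustrative toy and not the group's implementation: the `tanh` activation, the spectral radius of 0.9, the ridge regularization, and the sine-wave prediction task are all assumptions chosen for the example.

```python
import numpy as np

def run_reservoir(u, W_res, W_in):
    """Reservoir states driven by a 1-D input sequence u (weights stay fixed)."""
    x = np.zeros(W_res.shape[0])
    states = np.empty((len(u), W_res.shape[0]))
    for t, u_t in enumerate(u):
        x = np.tanh(W_res @ x + W_in * u_t)
        states[t] = x
    return states

def train_readout(states, targets, reg=1e-4):
    """Ridge regression for the output weights, the only trained parameters."""
    A = states.T @ states + reg * np.eye(states.shape[1])
    return np.linalg.solve(A, states.T @ targets)

rng = np.random.default_rng(0)
n = 200
W_res = rng.standard_normal((n, n))
# Rescale so that the spectral radius is 0.9 (a common heuristic, assumed here).
W_res *= 0.9 / np.max(np.abs(np.linalg.eigvals(W_res)))
W_in = rng.standard_normal(n)

# Toy task: predict the next sample of a sine wave.
signal = np.sin(0.1 * np.arange(1200))
states = run_reservoir(signal[:-1], W_res, W_in)  # states[t] has seen signal[t]
washout = 100                                     # discard the initial transient
w_out = train_readout(states[washout:1000], signal[washout + 1:1001])

pred = states[1000:] @ w_out
mse = np.mean((pred - signal[1001:]) ** 2)
print("test MSE:", mse)
```

Only `w_out` is fitted. Increasing `n` enlarges the reservoir without changing the training procedure, which is what makes scaling up attractive.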

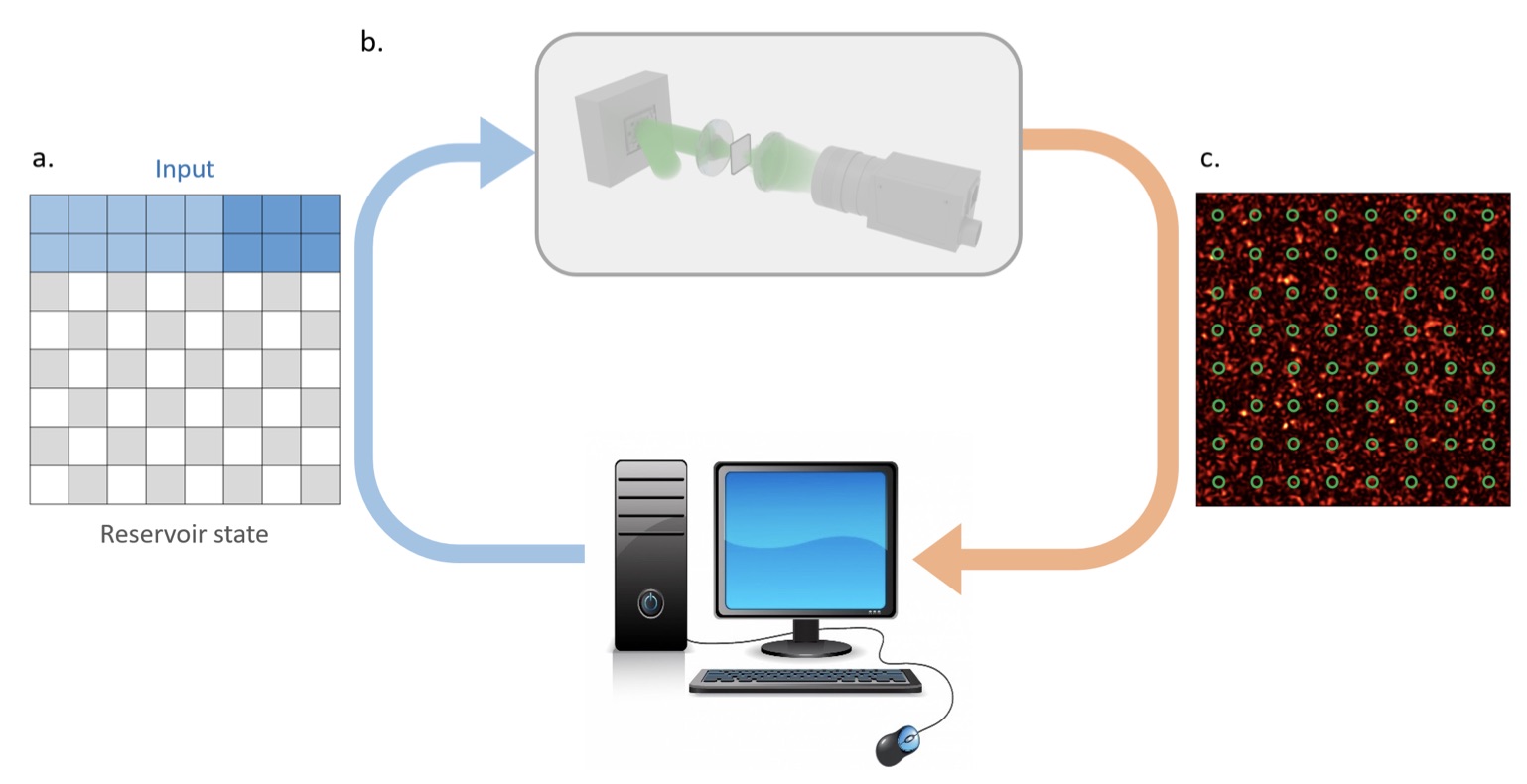

Echo State Networks are a specific case of Reservoir Computing, a relatively recent computational framework based on a large Recurrent Neural Network with fixed weights. Many physical implementations of Reservoir Computing have been proposed to improve speed and energy efficiency. In our lab, we study new advances in Optical Reservoir Computing using multiple light scattering to accelerate the recursive computation of the reservoir states.

In the group, we have implemented reservoir computing using a multiple-scattering medium together with a camera and a spatial light modulator. The medium embodies the random connections, and the recurrence is obtained by sending the camera images back to the spatial light modulator for the next frame.
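The feedback loop described above can be mimicked numerically. The sketch below is a toy model under loudly stated assumptions, not a description of the actual setup: the medium is replaced by a fixed i.i.d. complex Gaussian matrix, the spatial light modulator is assumed to encode the previous camera frame and the current input as phases, and the camera is assumed to record a clipped intensity. All names and parameters (`optical_reservoir_states`, `input_scale`, `saturation`) are hypothetical.

```python
import numpy as np

def optical_reservoir_states(u, n_pixels, rng, input_scale=1.0, saturation=3.0):
    """Toy simulation of a camera/SLM feedback loop through a random medium."""
    u = np.asarray(u, dtype=float).reshape(len(u), -1)
    dim = n_pixels + u.shape[1]
    # Assumed transmission matrix: complex Gaussian, drawn once and kept fixed.
    T = (rng.standard_normal((n_pixels, dim))
         + 1j * rng.standard_normal((n_pixels, dim))) / np.sqrt(2 * dim)
    x = np.zeros(n_pixels)
    states = np.empty((len(u), n_pixels))
    for t in range(len(u)):
        # SLM: previous camera frame and current input displayed as phases.
        field = np.exp(1j * np.pi * np.concatenate([x, input_scale * u[t]]))
        # Camera: measures intensity, clipped at an assumed saturation level.
        intensity = np.abs(T @ field) ** 2
        x = np.minimum(intensity, saturation) / saturation
        states[t] = x
    return states

u = np.sin(0.1 * np.arange(50))
states = optical_reservoir_states(u, n_pixels=64, rng=np.random.default_rng(0))
print(states.shape)
```

In this picture the matrix-vector product is the part performed by light propagation, while the readout trained on `states` remains an ordinary linear regression on a computer.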

Spin-off LightOn

Following a collaboration with signal processing and machine learning experts, in which we demonstrated image classification through a scattering medium, the startup LightOn was created. LightOn is a technology company developing novel optics-based computing hardware. LightOn develops light-based technology for large-scale artificial intelligence computations. Our ambition is to significantly reduce the time and energy required to make sense of the world around us.